TL;DR / Key Takeaways

- Big‑O abstracts growth rates; constant factors don’t matter, dominant terms do.

- Count elementary operations or comparisons and translate to Θ‑notation.

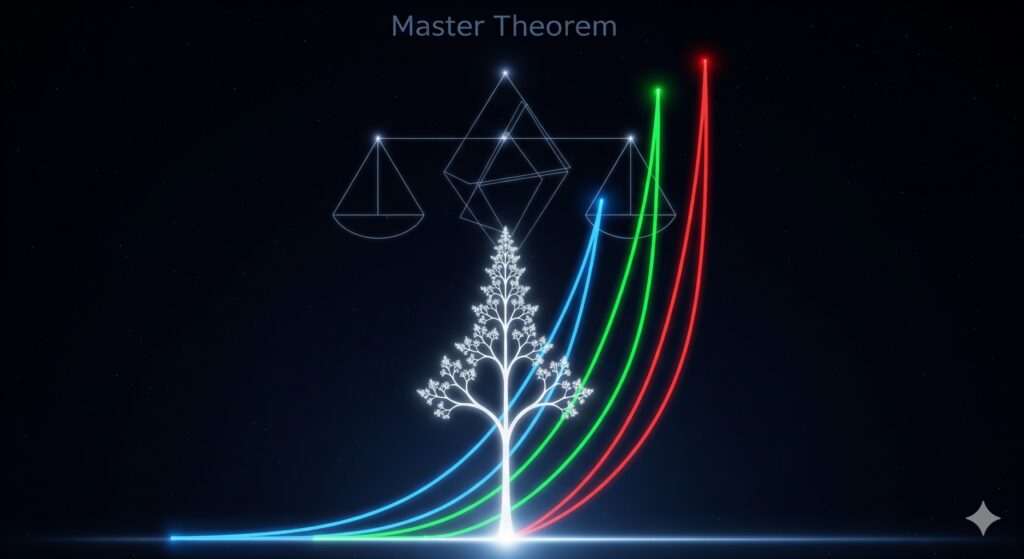

- Many divide‑and‑conquer algorithms fit T(n) = a T(n/b) + f(n); use the Master Theorem to classify.

- Benchmark properly: warmup, multiple trials, median/p95; compare curves (n, n log n, n², n³).

Introduction

Complexity analysis predicts how algorithms scale. We’ll build intuition by counting operations, contrasting growth rates, and solving common recurrences with runnable helpers. Related reads: Sorting & Searching; Recursion & Backtracking; Divide & Conquer patterns.

Background

- Asymptotic notation: O (upper bound), Ω (lower bound), Θ (tight bound).

- Dominance: n log n eventually beats n²; log factors grow slowly; exponent growth dominates.

- Divide & Conquer: split size by b, solve a subproblem, add, and combine work f(n).

Deep Dive

Counting Operations (illustrative)

# operation_counts.py — simple counters for loops

def count_linear(n: int) -> int:

c = 0

for _ in range(n):

c += 1

return c

def count_quadratic(n: int) -> int:

c = 0

for i in range(n):

for j in range(n):

c += 1

return c

print({"n": 1000, "O(n)": count_linear(1000), "O(n^2)": count_quadratic(100)})

Compare Growth Curves

# growth_curves.py — compare n, nlogn, n^2, n^3 for selected n

import math

def curves(ns):

out = []

for n in ns:

out.append({

"n": n,

"nlogn": int(n * (math.log2(n) if n>0 else 0)),

"n2": n*n,

"n3": n*n*n,

})

return out

print(curves([8,16,32,64]))

Master Theorem Helper + Examples

We analyze recurrences T(n) = a T(n/b) + f(n) by comparing f(n) with n^{log_b a}.

# master_theorem.py — classify T(n) = a T(n/b) + f(n)

import math

def classify(a: int, b: int, f_exp: float, f_polylog_k: int = 0) -> str:

"""Return case description.

f(n) ≈ n^{f_exp} (log n)^{k}; supply k via f_polylog_k.

"""

assert a >= 1 and b > 1

nlog = math.log(a, b)

if f_exp < nlog - 1e-9:

return "Case 1: T(n) = Θ(n^{log_b a})"

elif abs(f_exp - nlog) <= 1e-9:

if f_polylog_k >= 0:

return "Case 2: T(n) = Θ(n^{log_b a} (log n)^{%d})" % (f_polylog_k + 1)

else:

# f(n) dominates. Need regularity condition: a*f(n/b) ≤ c f(n)

return "Case 3 (if regularity holds): T(n) = Θ(f(n))"

return "Edge case"

# Examples:

print({

"merge_sort": classify(a=2,b=2,f_exp=1), # T(n)=2T(n/2)+Θ(n) → Θ(n log n)

"binary_search": classify(a=1,b=2,f_exp=0), # T(n)=T(n/2)+Θ(1) → Θ(log n)

"karatsuba": classify(a=3,b=2,f_exp=1), # T(n)=3T(n/2)+Θ(n) → Θ(n^{log_2 3})

"strassen": classify(a=7,b=2,f_exp=2), # T(n)=7T(n/2)+Θ(n^2) → Θ(n^{log_2 7})

"linearithmic": classify(a=2,b=2,f_exp=1, f_polylog_k=0),

})

Recursion Tree (merge sort)

flowchart TD A["T(n)"] --> B["T(n/2)"] A --> C["T(n/2)"] A --> D["merge: O(n)"] B --> E["T(n/4)"] B --> F["T(n/4)"] B --> G["O(n/2)"] C --> H["T(n/4)"] C --> I["T(n/4)"] C --> J["O(n/2)"] G --> K["O(n)"] J --> L["O(n)"]

Best Practices

- Prove with bounds: start from a count, then simplify to Θ(·).

- Benchmark with medians and percentiles; avoid single‑run conclusions.

- Prefer n log n over n² when feasible; confirm constants and memory.

- Use the Master Theorem when f(n) is polynomial times polylog; otherwise, use Akra–Bazzi or recursion tree.

Real‑World Scenarios

- Picking sort algorithms: n log n sorts (merge/heap/quick avg) vs. n² for small n, or counting/radix for known domains.

- Searching strategy: binary search over sorted data vs. hash‑table lookups (expected O(1)) with collision caveats.

Measuring and Signals

- Track asymptotic class and constant factors; memory footprint vs. cache locality.

- Compare time complexity on representative sizes; ensure curve shape matches theory.

# micro_bench.py — toy micro-benchmark (illustrative only)

import time

def bench(fn, n, trials=5):

best = float('inf')

for _ in range(trials):

t0 = time.perf_counter(); fn(n); dt = time.perf_counter()-t0

best = min(best, dt)

return best

print("ok") # placeholder; avoid slow runs here

Implementation Checklist

- Identify the operation to count (comparison, assignment, memory access).

- Derive T(n) and simplify to Θ(·); document assumptions.

- Benchmark across sizes; plot or log summaries; validate shape.

- Choose algorithms that meet time/memory constraints.

Conclusion

Complexity analysis guides algorithm choices. With a few reusable tools—counts, growth curves, and the Master Theorem—you can reason about performance and pick the right approach for your constraints.

This article is part of the Algorithms Mastery Series. Next: “Recursion & Backtracking (templates)”.