TL;DR / Key Takeaways

- Separate presentation, application, domain, and infrastructure layers; isolate the domain with ports and adapters (hexagonal).

- Move cross‑cutting concerns (auth, logging, metrics, retries) to middleware, interceptors, gateways, or a service mesh.

- Define microservice boundaries by bounded contexts; own your data; prefer async events over chatty sync chains; avoid shared databases.

- Test at the right levels: unit (domain), application (use cases), contract (provider/consumer), integration (adapters), thin E2E.

- Includes diagrams, code, and a checklist to operationalize SoC without over‑engineering.

Introduction

Every time a simple change forces you to wade through unrelated code, you’re paying a tax for poor separation of concerns (SoC). As systems scale—teams, features, deployments—this tax compounds. Good separation reduces cognitive load, accelerates delivery, and makes reliability practices (testing, observability, security) practical.

This post turns SoC from a textbook concept into actionable patterns. We’ll walk through layered architectures, ports-and-adapters, cross-cutting concerns (logging, auth, metrics), microservice boundaries, and testing strategies that keep systems modular without over-engineering.

Background: What Separation of Concerns Really Means

Separation of concerns is organizing software so that each part addresses a distinct responsibility with minimal overlap. It applies at many levels:

- Within a function: distinct steps and error handling

- Within a module: clear APIs and data ownership

- Across layers: UI, application, domain, infrastructure

- Across services: well-defined service boundaries and contracts

Goal: make changes local and the blast radius predictable.

Deep Dive: Practical SoC Patterns

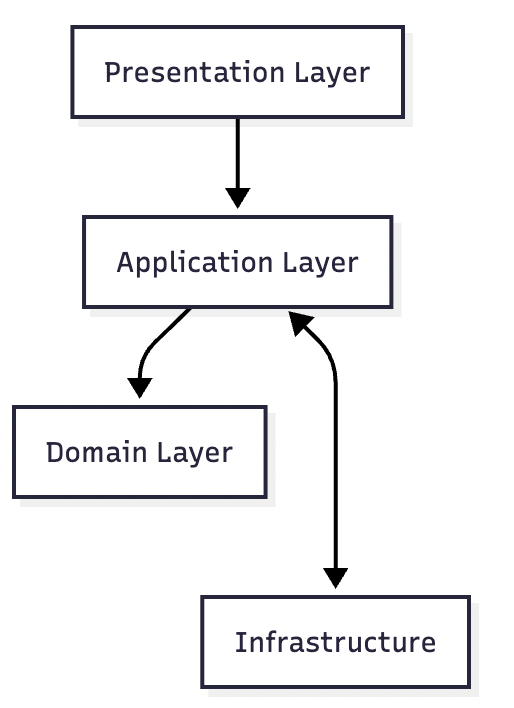

1) Layered Architecture Patterns

Start with the simplest layered approach your team understands and evolve as needed.

Mermaid: Minimal 3-layer flow

Key ideas:

- Presentation: endpoints/controllers, DTOs, input validation

- Application: use cases, orchestration, transactions

- Domain: entities, value objects, policies, domain events

- Infrastructure: DBs, queues, HTTP clients, file systems

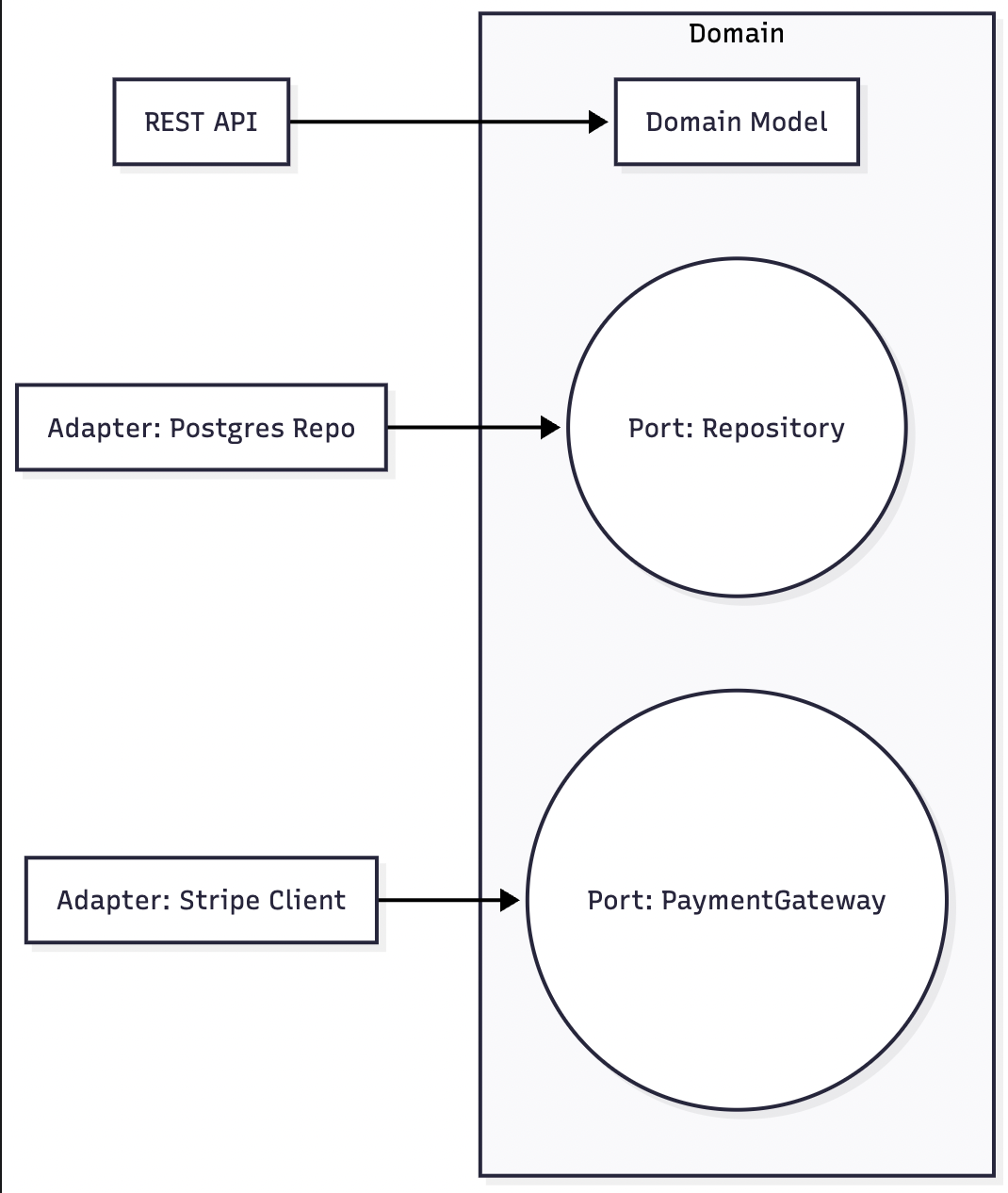

Ports-and-Adapters (Hexagonal) sharpens these boundaries by making the domain independent from I/O.

Example in TypeScript (domain interface + adapter):

```ts
import { Pool } from "pg";
import { Order } from "./order"; // illustrative path to the domain entity

// Domain port: the only persistence API the domain and use cases see
export interface OrderRepository {
  save(order: Order): Promise<void>;
  byId(id: string): Promise<Order | null>;
}

// Infrastructure adapter: all SQL lives here
export class PgOrderRepository implements OrderRepository {
  constructor(private readonly db: Pool) {}

  async save(order: Order): Promise<void> {
    await this.db.query("insert into orders(id, total) values($1, $2)", [order.id, order.total]);
  }

  async byId(id: string): Promise<Order | null> {
    const res = await this.db.query("select id, total from orders where id=$1", [id]);
    return res.rowCount ? new Order(res.rows[0].id, res.rows[0].total) : null;
  }
}
```

Why it helps: the domain doesn’t know about SQL or HTTP; tests can stub OrderRepository without spinning up Postgres.
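To make the testing claim concrete, here is a minimal Python sketch of the same port with an in-memory stand-in. The `Order` fields mirror the TypeScript example; `InMemoryOrderRepository` and `order_total_or_zero` are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass(frozen=True)
class Order:
    id: str
    total: int


class OrderRepository(Protocol):
    """Port: the only persistence API the domain and use cases see."""

    def save(self, order: Order) -> None: ...
    def by_id(self, order_id: str) -> Optional[Order]: ...


class InMemoryOrderRepository:
    """Test double that satisfies the port without a database."""

    def __init__(self) -> None:
        self._orders: dict[str, Order] = {}

    def save(self, order: Order) -> None:
        self._orders[order.id] = order

    def by_id(self, order_id: str) -> Optional[Order]:
        return self._orders.get(order_id)


def order_total_or_zero(repo: OrderRepository, order_id: str) -> int:
    """Hypothetical use case: depends on the port, not on Postgres."""
    order = repo.by_id(order_id)
    return order.total if order else 0


repo = InMemoryOrderRepository()
repo.save(Order(id="o-1", total=4200))
print(order_total_or_zero(repo, "o-1"))      # 4200
print(order_total_or_zero(repo, "missing"))  # 0
```

Because the use case only names the port, swapping the in-memory double for a real adapter requires no change to the calling code.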

2) Managing Cross‑Cutting Concerns (AOP, Middleware, Interceptors)

Cross‑cutting concerns are behaviors needed in many places: logging, auth, metrics, retries, tracing. Keep them out of business logic.

Option A: AOP (Java/Spring) — great for policy‑like concerns.

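```java
import org.aspectj.lang.ProceedingJoinPoint;
import org.aspectj.lang.annotation.Around;
import org.aspectj.lang.annotation.Aspect;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.stereotype.Component;

// Spring Boot AOP example: timing + logging
@Aspect
@Component
public class TimingAspect {
    private static final Logger log = LoggerFactory.getLogger(TimingAspect.class);

    // Matches public methods of @RestController classes under com.example.app.
    // @annotation(RequestMapping) alone would miss @GetMapping/@PostMapping,
    // which carry @RequestMapping only as a meta-annotation.
    @Around("execution(public * com.example.app..*(..)) "
            + "&& within(@org.springframework.web.bind.annotation.RestController *)")
    public Object time(ProceedingJoinPoint pjp) throws Throwable {
        long start = System.nanoTime();
        try {
            return pjp.proceed();
        } finally {
            long ms = (System.nanoTime() - start) / 1_000_000;
            log.info("Handled {} in {} ms", pjp.getSignature(), ms);
        }
    }
}
```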

Option B: Middleware (Node/Express) — explicit, easy to reason about.

```js
// Express: correlation id + logging + auth
import crypto from 'node:crypto';
import express from 'express';

const app = express();

// Attach a correlation id so every log line for a request can be joined.
app.use((req, res, next) => {
  req.correlationId = req.get('x-correlation-id') || crypto.randomUUID();
  next();
});

// Registered before auth so that rejected (401) requests are logged too.
app.use((req, res, next) => {
  const start = Date.now();
  res.on('finish', () => {
    console.log(req.method, req.path, res.statusCode, Date.now() - start, 'ms', req.correlationId);
  });
  next();
});

app.use((req, res, next) => {
  if (!req.headers.authorization) return res.status(401).send('Missing token');
  // ...verify token, attach user to req
  next();
});
```

Guidelines:

- Prefer middleware/interceptors for request pipelines; AOP for cross‑cutting rules

- Make cross‑cutting behavior configurable but not leaky (no business logic)

- Keep policy definitions near platform edges (API gateway, service mesh) when possible
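As a rough illustration of "configurable but not leaky", the sketch below wraps a business function in a timing decorator whose output sink is injected. The names `timed` and `apply_discount` are assumptions made up for this example, not part of any framework.

```python
import functools
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def timed(sink: Callable[[str, float], None]) -> Callable[[Callable[..., T]], Callable[..., T]]:
    """Wrap a function and report its duration in ms to `sink`, even when it raises."""

    def decorator(fn: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> T:
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                sink(fn.__name__, (time.perf_counter() - start) * 1000)

        return wrapper

    return decorator


timings: list[tuple[str, float]] = []


@timed(lambda name, ms: timings.append((name, ms)))
def apply_discount(total: int, percent: int) -> int:
    # Pure business rule: no logging or timing code in here.
    return total - total * percent // 100


print(apply_discount(1000, 15))  # 850
print(timings[0][0])             # apply_discount
```

The sink is the configurable part (a list here, a metrics client in practice), while the decorated function stays free of any measurement code.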

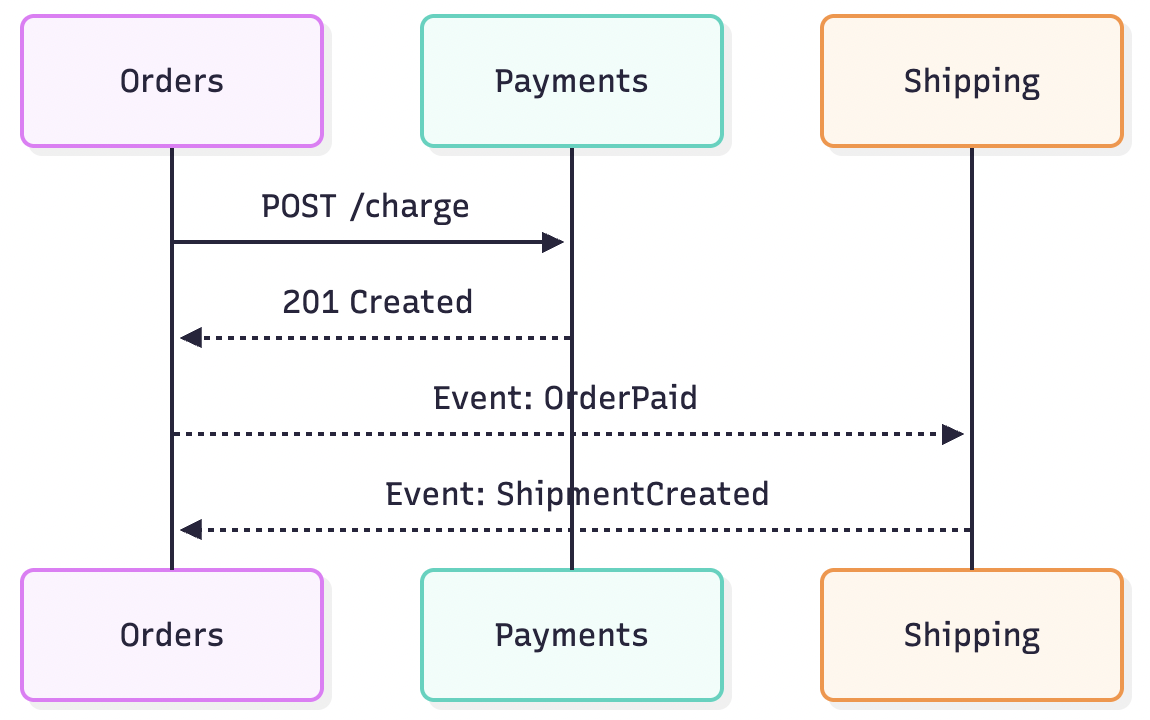

3) Microservices: Defining the Right Boundaries

Service boundaries should align with the domain (bounded contexts), not with database tables or the current team structure.

Anti‑patterns to avoid:

- Distributed monolith: services that share the same DB or deploy/release together

- Chatty services: too many synchronous calls; prefer async events for low‑coupling flows

- Generic “shared” service that becomes a bottleneck

Minimal boundary checklist:

- Single owner and roadmap per service

- Independent deployability and data ownership

- Clear API and asynchronous events as needed

- Observability: per‑service logs, metrics, traces
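The preference for events over synchronous call chains can be sketched with an in-process publish/subscribe bus. This is a simplified stand-in, assuming a real deployment would use a message broker with asynchronous delivery; `EventBus`, `OrderPlaced`, and the two in-memory stores are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    total: int


class EventBus:
    """In-process stand-in for a message broker (publish/subscribe)."""

    def __init__(self) -> None:
        self._handlers: dict[type, list[Callable[[object], None]]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable[[object], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        # Deliver to every handler registered for this exact event type.
        for handler in self._handlers[type(event)]:
            handler(event)


# Each "service" keeps its own data; neither reads the other's store.
shipments: dict[str, str] = {}
invoices: dict[str, int] = {}

bus = EventBus()
bus.subscribe(OrderPlaced, lambda e: shipments.update({e.order_id: "pending"}))
bus.subscribe(OrderPlaced, lambda e: invoices.update({e.order_id: e.total}))

# The publisher (Orders) does not know who is listening.
bus.publish(OrderPlaced(order_id="o-1", total=4200))
print(shipments)  # {'o-1': 'pending'}
print(invoices)   # {'o-1': 4200}
```

Adding a third subscriber requires no change to the publisher, which is the coupling reduction the checklist is after.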

4) Testing Strategies for Well‑Separated Systems

Test the right thing at the right level.

- Unit tests: domain rules without I/O

- Application tests: use case orchestration with stubbed ports

- Contract tests: verify provider/consumer expectations (e.g., JSON schema, API tests)

- Integration tests: adapters against real infra (DB, queue)

- End‑to‑end: thin smoke tests for core flows

Example: contract‑style API test (TypeScript + supertest)

```ts
import request from 'supertest';
import { app } from '../app';

describe('GET /orders/:id', () => {
  it('returns 200 with expected shape', async () => {
    const res = await request(app).get('/orders/123').expect(200);
    expect(res.body).toMatchObject({ id: expect.any(String), total: expect.any(Number) });
  });
});
```
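On the consumer side, the same expectation can be written down as data and checked against any payload. The following is a minimal Python sketch, assuming a contract is just a mapping of required field names to types; `ORDER_CONTRACT` and `contract_violations` are illustrative names, and real projects often use JSON Schema or a dedicated contract-testing tool instead.

```python
from typing import Any

# Consumer's expectation: field name -> required Python type.
ORDER_CONTRACT: dict[str, type] = {"id": str, "total": int}


def contract_violations(payload: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """Return human-readable violations; extra fields in the payload are allowed."""
    problems = []
    for field, expected in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected):
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems


print(contract_violations({"id": "123", "total": 4200, "currency": "EUR"}, ORDER_CONTRACT))
# []
print(contract_violations({"id": 123}, ORDER_CONTRACT))
# ['id: expected str, got int', 'missing field: total']
```

Tolerating extra fields lets the provider add data without breaking existing consumers, while removed or retyped fields are reported.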

5) Domain‑Driven Design for Separation

DDD gives names to boundaries, improving clarity and collaboration.

- Bounded Contexts: distinct models and language per subdomain

- Aggregates: transactional consistency boundaries

- Domain Services: behaviors that don’t fit neatly on an entity

- Anti‑corruption Layer: translate between contexts without leaking models
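An anti-corruption layer can be as small as one translation function. In the sketch below, the provider payload shape (`merchant_ref`, `amt`, `state`) is entirely hypothetical and chosen only to show a foreign vocabulary being mapped into our own model.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Any


@dataclass(frozen=True)
class Payment:
    """Model in our own context, using our own language."""

    order_id: str
    amount_cents: int
    settled: bool


def from_provider_payload(raw: dict[str, Any]) -> Payment:
    """Anti-corruption layer: the only place that knows the provider's field names."""
    return Payment(
        order_id=raw["merchant_ref"],
        amount_cents=int(Decimal(raw["amt"]) * 100),  # decimal string -> integer cents
        settled=raw["state"] == "CAPTURED",
    )


payment = from_provider_payload({"merchant_ref": "o-1", "amt": "42.00", "state": "CAPTURED"})
print(payment)  # Payment(order_id='o-1', amount_cents=4200, settled=True)
```

If the provider renames a field, only this function changes; the rest of the context keeps working with `Payment`.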

Python sketch: domain core isolated from frameworks

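```python
# domain/order.py
from __future__ import annotations

import uuid
from dataclasses import dataclass


@dataclass
class Order:
    id: str
    items: list[LineItem]  # LineItem is assumed to expose subtotal() -> int

    @classmethod
    def new(cls, items: list[LineItem]) -> Order:
        # Assumption: the domain generates its own IDs.
        return cls(id=str(uuid.uuid4()), items=list(items))

    def total(self) -> int:
        return sum(i.subtotal() for i in self.items)


# app/use_cases.py
class CreateOrder:
    def __init__(self, repo: OrderRepository):  # a port, not a concrete database class
        self.repo = repo

    def execute(self, cmd: CreateOrderCommand) -> str:
        order = Order.new(cmd.items)
        self.repo.save(order)
        return order.id
```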

6) Operationalizing Cross‑Cutting Concerns

Practical places to centralize cross‑cutting logic:

- API Gateway: authn/z, rate limiting, request shaping

- Service Mesh/Sidecars: mTLS, retries, timeouts, observability

- Libraries/Middleware: consistent logging, metrics, tracing

- CI/CD: security scans, lint rules, dependency policies

Keep business rules out of these layers; they should remain policy‑oriented.
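Where no mesh or gateway is available, a small library-level wrapper can carry the same kind of policy. The sketch below shows one possible retry-with-backoff helper; `with_retries`, its defaults, and the choice of retryable exceptions are assumptions for illustration, not a recommendation of specific values.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay_s: float = 0.1,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` on transient errors with exponential backoff; re-raise the last error."""
    for attempt in range(1, attempts + 1):
        try:
            return call()
        except retry_on:
            if attempt == attempts:
                raise
            sleep(base_delay_s * 2 ** (attempt - 1))
    raise ValueError("attempts must be >= 1")


calls = {"n": 0}


def flaky_lookup() -> str:
    # Fails twice with a transient error, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient")
    return "ok"


print(with_retries(flaky_lookup, sleep=lambda _: None))  # ok
print(calls["n"])                                        # 3
```

The policy (how many attempts, which errors, how long to wait) is configured at the call site or centrally, and `flaky_lookup` itself contains no retry logic.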

Best Practices

- Start with a modular monolith; extract services when pressures justify it

- Isolate domain from I/O through ports; keep edges thin

- Centralize cross‑cutting concerns using middleware, gateways, or sidecars

- Prefer async events to reduce coupling between services

- Keep service and domain boundaries reflected in repo structure

- Add contract tests where teams meet; automate schema checks

Real‑World Scenarios (Before → After)

1) Logging sprawl → Middleware

- Before: ad‑hoc logging in controllers and services

- After: request logging middleware with correlation IDs; cleaner code, consistent logs

2) Shared DB → Owned Data

- Before: Orders, Payments, and Shipping share the same database

- After: Each service owns its schema; publish/subscribe events connect flows

3) UI‑heavy logic → Use Case Layer

- Before: controllers orchestrate complex workflows

- After: the application layer exposes PlaceOrder and RefundPayment use cases; controllers are thin

Measuring Design Quality and Complexity (Signals)

- Change locality: a small feature touches only a few modules/services

- Coupling: fewer cycles in dependency graphs; limited shared libraries

- Cohesion: modules have a single purpose; fewer “misc” utils

- Test shape: fast unit tests dominate; E2E focused on happy path

- Observability: per‑concern signals (domain vs. infra) are easy to isolate

These are signals for conversation—not absolute scores.
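The coupling signal is one of the easier ones to automate. As a sketch, assuming you can export module dependencies as a simple mapping, a depth-first search is enough to report a cycle; `find_cycle` and the module names below are illustrative.

```python
def find_cycle(deps: dict[str, list[str]]) -> list[str]:
    """Return one dependency cycle as a list of module names, or [] if none exists."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, fully explored
    state = {m: WHITE for m in deps}
    path: list[str] = []

    def visit(module: str) -> list[str]:
        state[module] = GREY
        path.append(module)
        for dep in deps.get(module, []):
            if state.get(dep, WHITE) == GREY:
                # dep is already on the current path: we found a cycle.
                return path[path.index(dep):] + [dep]
            if state.get(dep, WHITE) == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        state[module] = BLACK
        return []

    for module in deps:
        if state[module] == WHITE:
            cycle = visit(module)
            if cycle:
                return cycle
    return []


layered = {
    "presentation": ["application"],
    "application": ["domain"],
    "infrastructure": ["domain"],
    "domain": [],
}
print(find_cycle(layered))  # []

leaky = {**layered, "domain": ["infrastructure"]}
print(find_cycle(leaky))    # ['domain', 'infrastructure', 'domain']
```

Run in CI, a check like this turns "the domain must not depend on infrastructure" from a convention into something that fails a build.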

Implementation Checklist

- Define layers and ports (draw a quick diagram)

- Extract cross‑cutting to middleware/interceptors

- Introduce domain interfaces for infra (repos, clients)

- Co‑locate domain code; isolate frameworks at edges

- Add contract tests at boundaries; unit tests for rules

- Document service boundaries and event contracts

Conclusion

Separation of concerns scales teams as much as it scales code. Keep the domain clean via layers and ports, push cross‑cutting concerns to the edges, and draw boundaries that map to the business—not the database. With the right tests in the right places, you can change behavior confidently without pulling a thread that unravels the whole system.

This article is part of the Software Architecture Mastery Series. Next: “Gang of Four Patterns for Modern Developers”